ChatGPT has made summarizing content child's play, but accuracy, plagiarism and data privacy remain a concern.

Summary

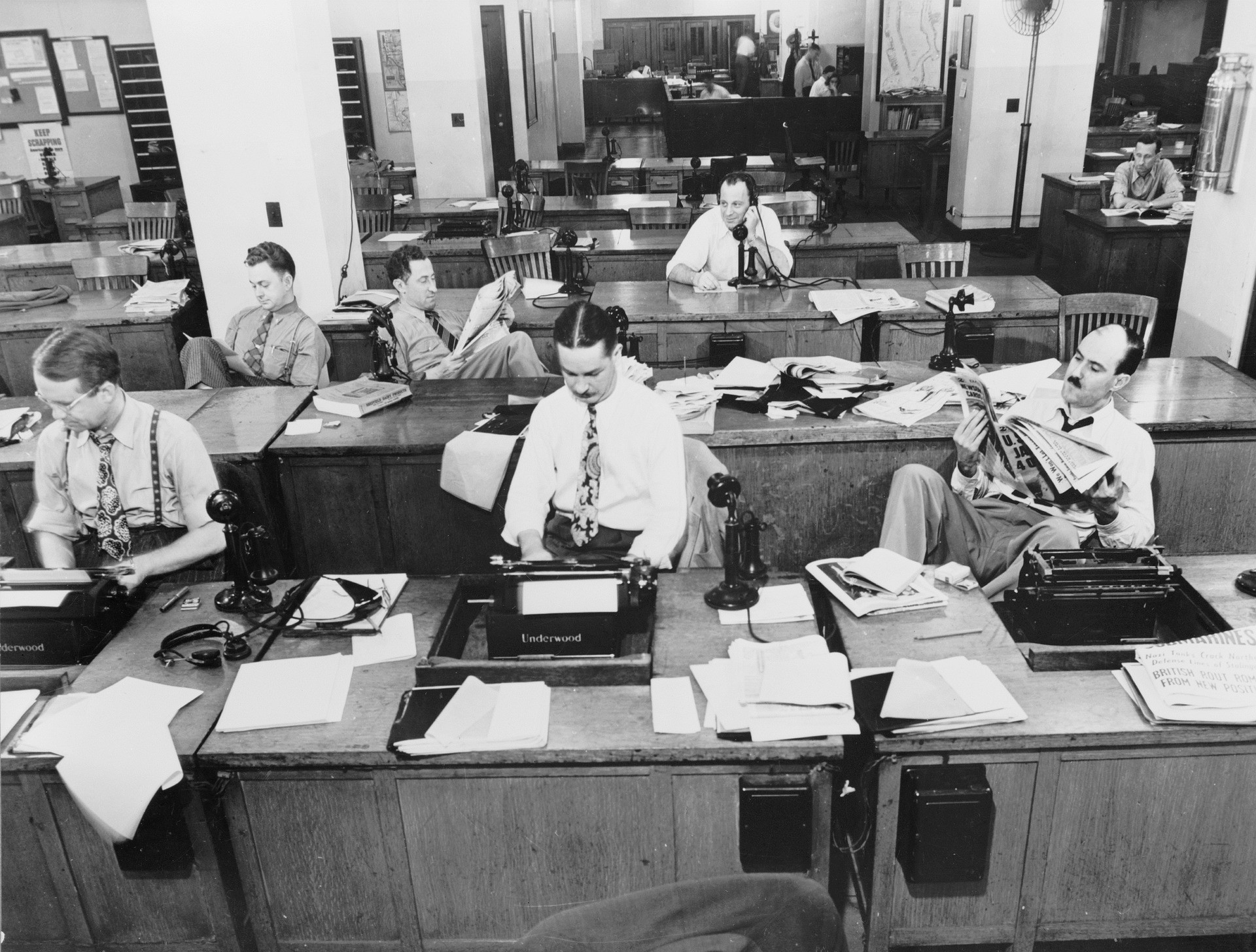

Since OpenAI's ChatGPT exploded onto the scene at the end of 2022, many voices have described artificial intelligence, and generative artificial intelligence in particular, as a revolutionary technology. The media sector is grappling with some deep and complex questions about what generative artificial intelligence (GenAI) could mean for journalism. Although it seems less and less likely that artificial intelligence will threaten journalists' jobs, as some may have feared, news managers are asking questions about, for example, the accuracy of information, plagiarism and data privacy.

To get an overview of the situation in the sector, WAN-IFRA surveyed a global community of journalists, editorial directors and other news professionals between the end of April and the beginning of May about the use of generative artificial intelligence tools in their newsrooms. In total, 101 participants from around the world took part in the survey; here are some key points from their answers.

Half of newsrooms already work with GenAI tools

Since most generative artificial intelligence tools became available to the public only a few months ago, at most, it is rather remarkable that almost half (49%) of the respondents said their newsrooms use tools such as ChatGPT. On the other hand, since the technology is still evolving rapidly and in perhaps unpredictable ways, it is understandable that many newsrooms feel cautious about it. This could be the case for the respondents whose companies have not (yet) adopted these tools.

Overall, the attitude towards generative artificial intelligence in the sector is extraordinarily positive: 70% of the survey participants said they expect generative artificial intelligence tools to be useful for their journalists and newsrooms. Only 2% say they do not see any value in the short term, while another 10% are not sure. A further 18% believe the technology needs more development to be really useful.

Content summarization is the most common use case

Although there have been somewhat alarmist reactions to ChatGPT, asking whether the technology could end up replacing journalists, in reality the number of newsrooms that use GenAI tools to create articles is relatively low. Instead, the main use case is the tools' ability to digest and condense information, for example for summaries and bullet-point lists, our respondents said. Other key tasks for which journalists use the technology include simplified research/search, text correction and workflow improvement.

In the future, common use cases are likely to evolve, as more and more newsrooms look for ways to make wider use of the technology and integrate it further into their operations. Our respondents highlighted personalization, translation and a higher level of workflow/efficiency improvements as specific examples or areas where they expect GenAI to become more useful.

Few newsrooms have guidelines for the use of GenAI

Practices vary widely in how the use of GenAI tools in newsrooms is controlled. For now, most publishers take a relaxed approach: almost half of the survey participants (49%) said that their journalists are free to use the technology as they see fit. In addition, 29% said they do not use GenAI.

Only a fifth of the respondents (20%) said they have guidelines from management on when and how to use GenAI tools, while 3% said that use of the technology is not allowed at their publications. As newsrooms grapple with the numerous and complex issues related to GenAI, it seems reasonable to expect that more and more publishers will establish specific AI policies on how to use the technology (or perhaps prohibit its use altogether).

Inaccuracies and plagiarism are newsrooms' main concerns

Since we have seen some cases in which a news outlet published content created with the help of artificial intelligence tools that later proved to be false or inaccurate, it may not be surprising that inaccurate information/content quality is the main concern among publishers when it comes to AI-generated content. 85% of respondents highlighted this as a specific problem relating to GenAI.

Another concern for publishers is plagiarism/copyright infringement, followed by data protection and privacy issues. It seems likely that the lack of clear guidelines (see previous point) only amplifies these uncertainties, and that developing AI policies should help alleviate them, together with staff training and open communication about the responsible use of GenAI tools.

Source: Journalism.co.uk